Scraping: Python tools and modules for scraping (updated)

Last update: 2022.03.28

Get HTML from server

urllib.request

- standard module, preinstalled with Python

- some operations need more code than requests

- it has urlretrieve() to download a file
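A minimal sketch of both operations using only the standard library. The helper names `fetch_html` and `download_file` are made up for this example, and the `User-Agent` value is only an assumption (some servers reject the default one).

```python
import urllib.request


def fetch_html(url, timeout=10):
    """Return the decoded body of `url` (hypothetical helper)."""
    # The User-Agent header is optional; the value is only an example.
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Use the charset from the Content-Type header, fall back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


def download_file(url, filename):
    """Save `url` as `filename` with urlretrieve() and return the local path."""
    path, _headers = urllib.request.urlretrieve(url, filename)
    return path
```

Usage could look like `html = fetch_html("https://httpbin.org/html")` or `download_file("https://httpbin.org/image/png", "image.png")`. The Python documentation describes `urlretrieve()` as a legacy interface, so for new code it may be safer to read the response and write the file yourself.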

Requests

- popular module which makes life easier

- extensions and modifications
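A short sketch of what "makes life easier" can mean in practice. The helper `fetch_html` is hypothetical, and httpbin.org is used only as an example address.

```python
import requests


def fetch_html(url, params=None, timeout=10):
    """Return page text and raise an exception on HTTP errors (hypothetical helper)."""
    response = requests.get(url, params=params, timeout=timeout)
    response.raise_for_status()  # turn 4xx/5xx answers into exceptions
    return response.text


# Requests builds the query string for you. Preparing a request
# (without sending it) shows the final URL.
prepared = requests.Request(
    "GET", "https://httpbin.org/get", params={"q": "python", "page": 2}
).prepare()
print(prepared.url)
```

The same query with `urllib` would need `urllib.parse.urlencode()` and manual joining of the URL.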

Search data in HTML

BeautifulSoup

- it uses css or own functions which can use regex

- it doesn't use xpath
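A small sketch of both ways of searching, run on an inline HTML string so it works without network access. The HTML and the class names are invented for this example.

```python
import re

from bs4 import BeautifulSoup

html = """
<div class="quote"><span class="text">First quote</span><a href="/author/1">Author A</a></div>
<div class="quote"><span class="text">Second quote</span><a href="/tag/x">tag</a></div>
"""

soup = BeautifulSoup(html, "html.parser")

# CSS selectors: select() returns a list, select_one() the first match
texts = [span.get_text() for span in soup.select("div.quote span.text")]

# own functions: find_all() can match attribute values with a regex
author_links = soup.find_all("a", href=re.compile(r"^/author/"))
hrefs = [a["href"] for a in author_links]

print(texts)
print(hrefs)
```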

lxml

- it uses xpath

- it doesn't use css on its own (see cssselect below)
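A minimal xpath sketch with `lxml.html`. The HTML is invented for this example.

```python
import lxml.html

html = """
<html><body>
<div class="book"><h3>Book One</h3><a href="/book/1">details</a></div>
<div class="book"><h3>Book Two</h3><a href="/book/2">details</a></div>
</body></html>
"""

tree = lxml.html.fromstring(html)

# text() returns text nodes, @href returns attribute values
titles = tree.xpath('//div[@class="book"]/h3/text()')
links = tree.xpath('//div[@class="book"]/a/@href')

print(titles)
print(links)
```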

Parsel

- it uses css or xpath with regex

- it is used by Scrapy

cssselect

- it converts css to xpath and uses lxml to search

pyquery

- it uses selectors like jquery

- it uses pseudo classes which don't exist in css, e.g. :first :last :even :odd :eq :lt :gt :checked :selected :file

Scraping framework(s)

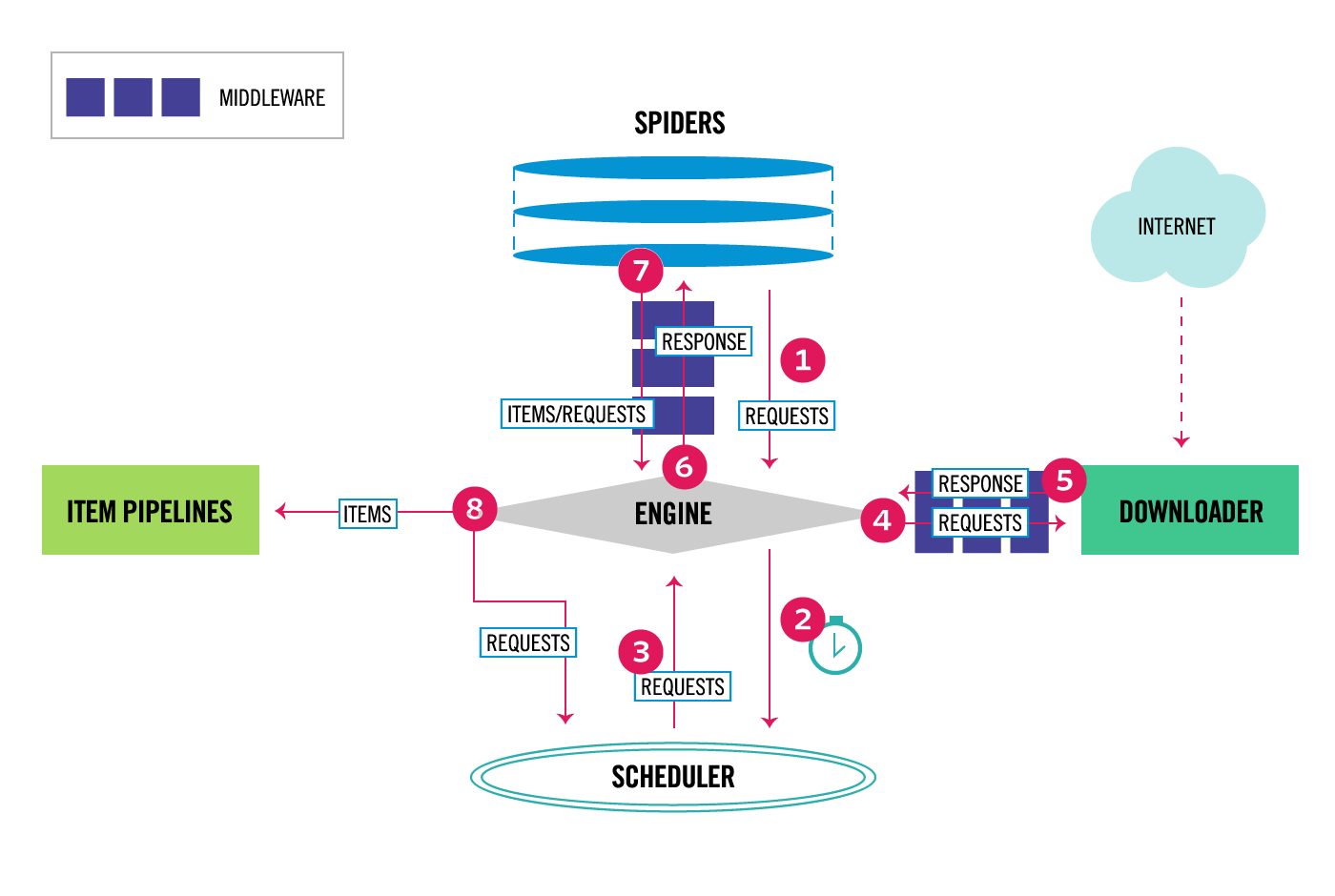

Scrapy

- it uses xpath and css selectors

- it can run many requests at the same time

- it can use proxies

- it can be run on zyte.com servers

- extension to work with selenium: scrapy-selenium

Source: https://doc.scrapy.org/en/latest/topics/architecture.html

MechanicalSoup

- it is Requests + BeautifulSoup

RobotFramework

RoboBrowser

mechanize

Work with JavaScript

If a page uses JavaScript, you may need one of these modules, which can control a real web browser.

Selenium

- it controls a real web browser which can run JavaScript

- it uses xpath and css selectors

- drivers:

- standard driver for Chrome: chromedriver

- standard driver for Firefox: geckodriver

- standard driver for Edge: edge driver

- special driver for Chrome: undetected chromedriver

- module to automatically download driver: webdriver-manager

See also selenium.dev

pyppeteer

playwright

Other tools for scraping

Portia

- docker with a tool that allows visual scraping

- created by the authors of Scrapy

Other tools for help

httpbin.org

- it can be used to test HTTP requests

- it sends back all data which it gets, so you can check if your requests create correct data

ToScrape.com

Web Scraping Sandbox with two fictional pages/portals which you can use to learn scraping.

There are examples with:

- normal pagination

- infinite scrolling pagination

- JavaScript generated content

- a table based messed-up layout

- login with CSRF token (any user/passwd works)

- ViewState (C# DotNet)

curlconverter.com

- can convert a curl command to code in Python (requests) or other languages

- some (API) documentation shows examples as curl commands

- some conversions may have mistakes

Older link curl.trillworks.com
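A hand-written sketch of what such a conversion can look like (it is not real output from the converter). The curl command and its values are invented, and httpbin.org is used only as an example address.

```python
import requests

# curl 'https://httpbin.org/post' \
#   -H 'Accept: application/json' \
#   -d 'name=alice&lang=python'

headers = {"Accept": "application/json"}
data = {"name": "alice", "lang": "python"}

# sending it would be:
# response = requests.post("https://httpbin.org/post", headers=headers, data=data)

# Preparing the request (without sending it) lets you compare it
# with the original curl command and spot conversion mistakes.
prepared = requests.Request(
    "POST", "https://httpbin.org/post", headers=headers, data=data
).prepare()

print(prepared.method, prepared.url)
print(prepared.headers["Content-Type"])
print(prepared.body)
```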

Similar:

- reqbin.com

- curl2scrapy (converts for module scrapy)

- tools Postman and Insomnia can also generate code for Python

"DevTools" in Chrome and Firefox

- tab: Inspector (Elements in Chrome) - to search items in HTML and get CSS or XPath selector (but it gives a selector which doesn't use classes and ids, so it can be long and unreadable for a human)

- tab: Network - to see all requests from browser to server and get requests used by JavaScript to get data

- tab: Console - to test JavaScript code or use $("...") to test css selector or $x("...") to test xpath

- extensions:

- button to turn off JavaScript:

Extra doc for Firefox

Tools which can be used to test requests and API

They can also generate code in python using urllib or requests

- charlesproxy - local proxy server

- mitmproxy - local proxy server (created in Python)

By The Way

pandas.read_html()

It can read HTML from a file or URL, scrape data from every standard <table> and create a list of DataFrames.
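A small sketch on an inline HTML string. The table is invented for this example, and `read_html()` needs a parser installed (lxml, or BeautifulSoup with html5lib).

```python
from io import StringIO

import pandas as pd

html = """
<table>
<tr><th>name</th><th>price</th></tr>
<tr><td>Laptop</td><td>1200</td></tr>
<tr><td>Mouse</td><td>25</td></tr>
</table>
"""

# read_html() returns a list with one DataFrame per <table>
tables = pd.read_html(StringIO(html))
df = tables[0]

print(len(tables))
print(df)
```

With a real page you can pass the URL instead of `StringIO(html)`, but some servers block the default request, so it may be safer to download the HTML with requests first.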

Example code for different pages and different tools is on my GitHub:

furas.pl
